Part 1 of this article covered JSP precompilation and tuning the recompilation settings provided in Weblogic.

Now, let's look at the other parameters that need to be tuned and set correctly for the Weblogic server to deliver acceptable performance for your site.

Usually the problem statement is similar to this:

1. The site regularly stops responding and all available execute threads are consumed. All future requests fail to be handled and a server restart is required.

2. The page response times of the entire site are too high and need to be brought down to a more usable level.

Note: An additional post has been published that provides a skeleton Action Plan for analyzing the entire slow-site/high-CPU issue, including managing stakeholder expectations and appropriate reporting.

Operating System Review

A review of the Operating System configuration needs to look at:

a) The number of file descriptors available and whether that matches the value recommended for Weblogic

b) Various TCP settings (also called NDD parameters) which will affect how long it takes to recycle a closed or dropped connection.

The two most important settings are:

• File Descriptor limit: increase to 8192, if not 65536. A detailed follow-up on File Descriptors is published here.

• tcp_time_wait_interval: change to 60000 (milliseconds, i.e. 1 minute). This is how long a socket is kept in the TIME_WAIT state after the connection is closed, even though the response has already been sent to the client. The default in Solaris 8 is 4 minutes; set it down to 1 minute. The default in Solaris 9 is already 1 minute.
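On the JVM side, you can check which file descriptor limit a Java process actually inherited. The sketch below assumes a HotSpot-style JVM on a Unix platform, where `com.sun.management.UnixOperatingSystemMXBean` is available; the class name `FdLimitCheck` is hypothetical, and the check reports the limit of the JVM it runs in, so it would have to run inside the server JVM (for example from a diagnostic page) to tell you about Weblogic itself.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;

final class FdLimitCheck {
    // Lower bound suggested in the text; 65536 is the preferred value.
    static final long RECOMMENDED_MIN = 8192;

    /**
     * Returns the maximum number of file descriptors this JVM may open,
     * or -1 if the platform MXBean does not expose it (e.g. on Windows
     * or on a JVM without the com.sun.management extensions).
     */
    static long maxFileDescriptors() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof com.sun.management.UnixOperatingSystemMXBean) {
            return ((com.sun.management.UnixOperatingSystemMXBean) os).getMaxFileDescriptorCount();
        }
        return -1;
    }

    /** True when a known limit meets the recommended minimum. */
    static boolean meetsRecommendation(long limit) {
        return limit >= RECOMMENDED_MIN;
    }

    public static void main(String[] args) {
        long limit = maxFileDescriptors();
        if (limit < 0) {
            System.out.println("File descriptor limit not available on this platform/JVM");
        } else {
            System.out.println("Max file descriptors: " + limit
                    + (meetsRecommendation(limit) ? " (OK)" : " (below " + RECOMMENDED_MIN + ")"));
        }
    }
}
```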

Check the Oracle site for the latest recommended values.

Database Usage and JDBC Drivers

a) If you get OutOfMemory errors in JDBC calls, it is recommended that the JDBC driver be upgraded to the latest version.

b) Prepared Statement caching is a feature that keeps prepared statements in a cache for each connection in the pool, so they do not have to be re-prepared and re-parsed for every call. This functionality needs to be enabled per Connection Pool and can have a significant impact on pool performance, but the gain needs to be validated with a focused round of performance testing.

It should be noted that for every Prepared Statement held in the cache, a cursor is held open on the database for each connection in the pool. So if a cache size of 10 is used on abcPool and the pool has 50 connections, 500 open cursors will be required. Repeatable load tests will highlight any gains achieved by enabling this caching.
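The cursor arithmetic above applies to every pool that has statement caching enabled, so it is worth totalling it across the domain before turning caching on. A minimal sketch with hypothetical names (`CursorEstimate`, and a second pool `xyzPool` with made-up sizes) that multiplies cache size by pool size for each pool and sums the result:

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class CursorEstimate {
    /** Cursors held open by one pool: one per cached statement per connection. */
    static int openCursors(int statementCacheSize, int poolSize) {
        if (statementCacheSize < 0 || poolSize < 0) {
            throw new IllegalArgumentException("sizes must be non-negative");
        }
        return statementCacheSize * poolSize;
    }

    /** Sums the estimate over several pools, given as name -> {cacheSize, poolSize}. */
    static int totalOpenCursors(Map<String, int[]> pools) {
        int total = 0;
        for (int[] p : pools.values()) {
            total += openCursors(p[0], p[1]);
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, int[]> pools = new LinkedHashMap<>();
        pools.put("abcPool", new int[] {10, 50}); // the example from the text
        pools.put("xyzPool", new int[] {5, 20});  // hypothetical second pool
        for (Map.Entry<String, int[]> e : pools.entrySet()) {
            int[] p = e.getValue();
            System.out.println(e.getKey() + ": " + openCursors(p[0], p[1]) + " open cursors");
        }
        System.out.println("Total: " + totalOpenCursors(pools));
    }
}
```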

Review JDBC Pool Sizes

Review the connection pool size against the number of Execute threads; usually, keep the pool size close to the Execute thread count. Note: this applies to versions earlier than Weblogic 9, as explained below.

If the JDBC pool is much smaller than the number of Execute threads, performance can suffer quite dramatically, since threads have to wait for connections to be returned to the pool.
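This waiting is easy to reproduce in isolation. The sketch below is not Weblogic code: it models the connection pool as a `java.util.concurrent.Semaphore` and the execute threads as a fixed thread pool (class and method names are hypothetical), and counts how many threads find no free connection when all of them ask for one at the same moment.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class PoolContention {
    /**
     * Starts `threads` workers that all want a connection at once from a pool
     * of `poolSize` connections, and returns how many found the pool empty.
     */
    static int countWaiters(int threads, int poolSize) {
        if (threads < 1 || poolSize < 1) {
            throw new IllegalArgumentException("threads and poolSize must be >= 1");
        }
        Semaphore pool = new Semaphore(poolSize);                        // stands in for the JDBC pool
        CountDownLatch attempted = new CountDownLatch(threads);
        CountDownLatch finishWork = new CountDownLatch(1);
        AtomicInteger waited = new AtomicInteger();
        ExecutorService workers = Executors.newFixedThreadPool(threads); // stands in for execute threads
        for (int i = 0; i < threads; i++) {
            workers.submit(() -> {
                boolean gotConnection = pool.tryAcquire();
                if (!gotConnection) {
                    waited.incrementAndGet();
                }
                attempted.countDown();
                if (!gotConnection) {
                    pool.acquire();     // blocks until another thread returns a connection
                }
                try {
                    finishWork.await(); // simulated request work while holding the connection
                } finally {
                    pool.release();     // return the connection to the pool
                }
                return null;
            });
        }
        try {
            attempted.await();      // every worker has tried once; nobody has released yet
            finishWork.countDown(); // let the holders finish so the waiters can proceed
            workers.shutdown();
            if (!workers.awaitTermination(10, TimeUnit.SECONDS)) {
                workers.shutdownNow();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            workers.shutdownNow();
            throw new IllegalStateException("interrupted while measuring", e);
        }
        return waited.get();
    }

    public static void main(String[] args) {
        System.out.println("15 threads, pool of 5:  " + countWaiters(15, 5) + " had to wait");
        System.out.println("15 threads, pool of 16: " + countWaiters(15, 16) + " had to wait");
    }
}
```

With 15 threads and 5 connections, 10 threads block until a connection comes back; with a pool of threads plus one, none do.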

Your most frequently used pools should have their minimum (initial) and maximum sizes increased to the number of Execute threads plus one. This means there is an available connection for every thread.

One comment on pool sizing: wherever possible, it is beneficial to set the initial and maximum connections of a JDBC pool to the same size, as this avoids the cost of expanding and shrinking the pool, both in establishing new connections while the pool expands and in the pool's housekeeping work.

However it is also recommended to monitor the JDBC pools during peak hours and see how many connections are being used at maximum. If you are not hitting the MaxCapacity, it is useful to reduce the MaxCapacity to avoid unnecessary open cursors on the database.
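The sizing rules above (initial equals max equals execute threads plus one, then trimmed if peak monitoring shows the max is never reached) can be written down as a small helper. This is a sketch: the class, the 25-thread example, the observed peak, and the headroom added on top of that peak are all hypothetical; the two numbers map to the InitialCapacity and MaxCapacity attributes of a Weblogic 8.1 connection pool.

```java
final class PoolSizing {
    /** Initial and max capacity both set to execute threads + 1, as suggested in the text. */
    static int[] forExecuteThreads(int executeThreads) {
        if (executeThreads < 1) {
            throw new IllegalArgumentException("executeThreads must be >= 1");
        }
        int size = executeThreads + 1;
        return new int[] {size, size}; // {InitialCapacity, MaxCapacity}
    }

    /**
     * Lowers MaxCapacity (and InitialCapacity, to keep them equal) when the
     * observed peak plus a hypothetical headroom never reaches the current max.
     */
    static int[] trimToObservedPeak(int[] capacity, int observedPeak, int headroom) {
        if (observedPeak < 0 || headroom < 0) {
            throw new IllegalArgumentException("peak and headroom must be non-negative");
        }
        int trimmed = Math.min(capacity[1], observedPeak + headroom);
        return new int[] {Math.min(capacity[0], trimmed), trimmed};
    }

    public static void main(String[] args) {
        int[] start = forExecuteThreads(25);             // hypothetical thread count
        System.out.println("InitialCapacity=" + start[0] + ", MaxCapacity=" + start[1]);
        int[] trimmed = trimToObservedPeak(start, 18, 2); // hypothetical peak of 18, 2 spare
        System.out.println("After peak review: InitialCapacity=" + trimmed[0]
                + ", MaxCapacity=" + trimmed[1]);
    }
}
```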

Note: As of Weblogic 9 and higher, Execute Queues have been replaced by Work Managers. For JDBC pools, a Work Manager can define a max-threads-constraint that sets how many threads to allocate for a particular Datasource.

It is possible to run Weblogic 9 and 10 with Execute Queues as in earlier versions, but this is not recommended, since Work Managers are self-tuning and more advanced than Execute Queues.

WebLogic Server 8.1 implemented Execute Queues to handle thread management, in which you created thread pools to determine how workload was handled. WebLogic Server still provides Execute Queues for backward compatibility, primarily to facilitate application migration. However, when developing new applications, you should use Work Managers to perform thread management more efficiently.

You can enable Execute Queues in the following ways:

• Using the command-line option -Dweblogic.Use81StyleExecuteQueues=true

• Setting the Use81StyleExecuteQueues property via the Kernel MBean in config.xml.

Enabling Execute Queues disables all Work Manager configuration and thread self-tuning.

Execute Queues behave exactly as they did in WebLogic Server 8.1.
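To confirm which thread model a running server was started with, a small check like the one below could be called from code running inside the server JVM. It is a sketch with a hypothetical class name, and it only sees the command-line system property; a Use81StyleExecuteQueues setting made through the Kernel MBean in config.xml is not visible to it.

```java
final class ThreadModelCheck {
    static final String PROPERTY = "weblogic.Use81StyleExecuteQueues";

    /** True if this JVM was started with -Dweblogic.Use81StyleExecuteQueues=true. */
    static boolean usesExecuteQueues() {
        return Boolean.getBoolean(PROPERTY); // reads the system property, false if unset
    }

    static String describe() {
        return usesExecuteQueues()
                ? "8.1-style Execute Queues (Work Managers and self-tuning disabled)"
                : "Flag not set on the command line (check config.xml as well)";
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```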

Database Persistent JMS Queues

Verify whether the database architecture is such that persistent JMS queues use, as their message store, the same database instance that your Weblogic portal uses for data.

As the volumes on these queues increase, this could significantly degrade the performance of the portal by competing for valuable CPU cycles on the database server.

1. Move the message store for persistent queues to a separate database instance from that used by most of the JDBC pools belonging to the Weblogic server. This will prevent increases in message volumes from adversely affecting the performance of the database, which would also slow the portal applications down and vice-versa.

2. Implement paging with a file-store. This restricts the amount of memory consumed by JMS queues by paging message contents to disk and holding only headers in memory. Note that paging does not provide failover protection in the way persistence does, and that it performs better with a paging file-store than with a paging JDBC store.

3. It is recommended that a review is also undertaken to determine exactly which queues are persisted and whether they truly need to be. The performance gains from switching to non-persisted queues are substantial, and guaranteed delivery is not always required.

Review Number of Execute Threads

A common mistake made by Support teams when seeing Stuck threads is to increase the number of execute threads in a single ‘default’ queue. I once worked on a project that ran the Weblogic server with 95 threads.

This figure is very high and results in a large amount of context-switching as load increases, which consumes valuable CPU cycles. Because threads consume memory, you can degrade performance by increasing the value of the Thread Count attribute unnecessarily.

Taking a Thread Dump when the server is not responding will show what the threads are doing and help identify whether there is an application coding issue or a deadlock occurring. Use Samurai to analyze these, as described in an earlier post.

It is recommended that regular monitoring of the number of idle threads and the number of queued requests for each execute queue is set up via MBeans. This allows the Support teams to plot utilization graphs and validate any changed values.
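Both ideas can be sketched with the standard `java.lang.management` and JMX APIs. `deadlockedThreadNames` asks the JVM for deadlocked threads, a quick in-process complement to a full thread dump (not a replacement for Samurai). `sample` reads one numeric MBean attribute so it can be logged and graphed over time. The demo reads the JVM's own Threading MBean; in Weblogic 8.1 you would instead point it at the execute queue runtime MBeans (attributes such as ExecuteThreadCurrentIdleCount and PendingRequestCurrentCount; verify the exact ObjectNames and attribute names against the MBean reference for your version). Connecting to a remote server is not shown, and the class name is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;

final class ThreadHealth {
    /** Names of threads the JVM reports as deadlocked (empty list if none). */
    static List<String> deadlockedThreadNames() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        long[] ids = threads.findDeadlockedThreads(); // null when no deadlock is found
        List<String> names = new ArrayList<>();
        if (ids == null) {
            return names;
        }
        for (ThreadInfo info : threads.getThreadInfo(ids, true, true)) {
            if (info != null) {
                names.add(info.getThreadName());
            }
        }
        return names;
    }

    /** Reads one numeric attribute from an MBean, e.g. for a periodic utilization graph. */
    static long sample(MBeanServerConnection conn, String objectName, String attribute) {
        try {
            Object value = conn.getAttribute(new ObjectName(objectName), attribute);
            return ((Number) value).longValue();
        } catch (Exception e) {
            throw new IllegalStateException("Could not read " + objectName + " / " + attribute, e);
        }
    }

    public static void main(String[] args) {
        MBeanServerConnection local = ManagementFactory.getPlatformMBeanServer();
        System.out.println("Live threads: " + sample(local, "java.lang:type=Threading", "ThreadCount"));
        System.out.println("Deadlocked:   " + deadlockedThreadNames());
    }
}
```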

Note: As of Weblogic 9 and higher, Execute Queues have been replaced by Work Managers. Another good link is here.

Use a Dedicated Execute Thread Queue for Intensive Applications

Since the number of threads should be kept small, if a particular application is seen to use the majority of the execute threads, the following two approaches are suggested to resolve the issue:

1. Review the design of the offending application to determine whether it really needs so many threads.

2. Move the offending application to a dedicated execute queue with enough threads allocated to it. This will prevent it from starving the main server of threads and allow the ‘default’ queue to remain with a lower number of Execute threads. This split of Execute Queues can be done at servlet or webapp level. Mail me if you need an example; we have done both successfully in WL 8.1 and 9.
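The effect of a dedicated queue can be illustrated outside Weblogic with plain `java.util.concurrent` executors. This is an analogy, not Weblogic configuration: the "intensive application" is a batch of tasks that hold their threads, and the question is whether an ordinary request still gets a thread. The class name, pool sizes, and timeouts are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class DedicatedPools {
    /**
     * Fills heavyPool with `heavyTasks` blocked tasks (the intensive application),
     * then reports whether lightPool still serves an ordinary request within timeoutMs.
     */
    static boolean lightRequestServed(ExecutorService heavyPool, ExecutorService lightPool,
                                      int heavyTasks, long timeoutMs) {
        CountDownLatch heavyWorkDone = new CountDownLatch(1);
        try {
            for (int i = 0; i < heavyTasks; i++) {
                heavyPool.submit(() -> {
                    heavyWorkDone.await(); // holds its thread until released
                    return null;
                });
            }
            Future<String> light = lightPool.submit(() -> "served");
            return "served".equals(light.get(timeoutMs, TimeUnit.MILLISECONDS));
        } catch (TimeoutException e) {
            return false;                  // no free thread picked up the ordinary request
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            heavyWorkDone.countDown();     // release the intensive tasks
        }
    }

    public static void main(String[] args) {
        ExecutorService shared = Executors.newFixedThreadPool(4);
        System.out.println("Shared queue:    " + lightRequestServed(shared, shared, 4, 200));
        ExecutorService dedicated = Executors.newFixedThreadPool(4);
        ExecutorService defaultPool = Executors.newFixedThreadPool(4);
        System.out.println("Dedicated queue: " + lightRequestServed(dedicated, defaultPool, 4, 5000));
        shared.shutdown();
        dedicated.shutdown();
        defaultPool.shutdown();
    }
}
```

When both workloads share one pool, the ordinary request times out behind the blocked tasks; with a separate pool for the intensive work, it is served straight away.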

Note: However, as of Weblogic 9 and higher, Execute Queues have been replaced by Work Managers. You can use a Work Manager to dedicate resources at the application, web application, or EJB level.

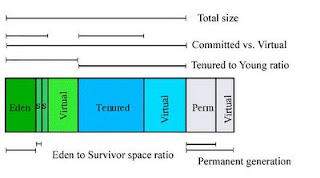

Review Java VM Settings

Tuning the JVM settings for the Total Heap and the Young/Old Generations is essential to regulate the frequency of Garbage Collection on the servers.

The basic primer is on the Sun website, and a follow-up with actual values and learnings is published here. The most essential settings are -Xms and -Xmx for the total heap, and -XX:NewSize and -XX:NewRatio for the Young Generation. Also set -XX:PermSize and -XX:MaxPermSize appropriately to avoid consuming excessive memory.
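Two things help when reviewing these values: knowing what -XX:NewRatio implies (it is the old-to-young ratio, so the young generation is heap / (NewRatio + 1)), and confirming from inside the JVM what it actually received. The sketch below does both with standard `java.lang.management` calls; the class name is hypothetical, `Runtime.maxMemory()` only approximates -Xmx, and the memory pool names printed depend on the JVM version and garbage collector.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

final class HeapSettings {
    static final long MB = 1024L * 1024L;

    /** Young generation size implied by -XX:NewRatio: young = heap / (NewRatio + 1). */
    static long youngGenFromNewRatio(long heapBytes, int newRatio) {
        if (newRatio < 1) {
            throw new IllegalArgumentException("NewRatio must be >= 1");
        }
        return heapBytes / (newRatio + 1);
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        System.out.println("Max heap (approx. -Xmx): " + rt.maxMemory() / MB + " MB");
        System.out.println("Committed heap now:      " + rt.totalMemory() / MB + " MB");
        // One line per memory pool (eden, survivor, old, perm/metaspace...), names vary by JVM.
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            String max = (usage == null || usage.getMax() < 0)
                    ? "undefined" : (usage.getMax() / MB) + " MB";
            System.out.println(pool.getName() + " (" + pool.getType() + "): max " + max);
        }
        System.out.println("Young gen for a 1024 MB heap with NewRatio=2: "
                + youngGenFromNewRatio(1024 * MB, 2) / MB + " MB");
    }
}
```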

Other Areas

1. To speed up server start times, do not delete the .wlnotdelete directories at startup, unless you are deploying changed application jars and code.

Be aware that you might occasionally have trouble shutting down the server, which then goes into an UNKNOWN state because of too many old temp files and wl_internal files.

You will get the dreaded error below, which can only be resolved by killing the process and clearing out all temp files, .lck files, etc. within the domain. The files are under DOMAIN_HOME/servers/

/

```
weblogic.management.NoAccessRuntimeException: Access not allowed for subject: principals=[weblogic, Deployers], on ResourceType: ServerLifeCycleRuntime Action: execute, Target: shutdown
    at weblogic.rjvm.ResponseImpl.unmarshalReturn(ResponseImpl.java:195)
    at weblogic.rmi.internal.BasicRemoteRef.invoke(BasicRemoteRef.java:224)
```

2. Avoid the URLEncoder.encode method call as much as possible. This call is not optimal in most JDKs earlier than 1.5 and often shows up as a memory and CPU hotspot.

3. If thread dumps show that threads are often in a suspended state (waiting so long that they were suspended) while doing a socket read from the database, check the network connection between WebLogic and the database.

The DBA would not see this as a long-running SQL statement, so it needs to be investigated at the network level.

4. Switch off all DEBUG options in Production on the app server, as well as on the web server and web server plugins.

5. Ensure log files are rotated so that they can be backed up and moved out of the main log directory. Define a rotation policy based on file size or a fixed time interval (such as every 24 hours).

However, also note that on certain platforms, if some application is tailing the log at the time of rotation, the rotation fails. Stop the tailing application and reopen the tail after the rotation is complete.

6. Do not use "Emulate Two-Phase Commit for non-XA Driver" for DataSources.

It is not a good idea to use emulated XA. It can result in loss of data integrity and can cause heuristic exceptions. This option is only meant for use with databases for which there is no XA driver so that the datasources for these pools can still participate in XA transactions.

If an XA driver is available (and there is for Oracle), it should be used. If this option is selected because of problems with Oracle's Thin XA driver, try the newest version, or pick a different XA driver.

7. The <save-sessions-enabled> element (in the container-descriptor section of weblogic.xml) controls whether session data is cleaned up during redeploy or undeploy.

It affects memory and replicated sessions. The default is false. Setting the value to true means session data is saved, which adds overhead.

8. If firewalls are present between the Weblogic server and the database, or an external system connecting via JMS, this can cause transactional and session timeout issues. The session timeout on the firewall should be configured to allow for normal transaction times.

In the case of JMS, transactional interoperation between the two servers can be compromised and hence it is beneficial to open the firewall between the two servers so that RMI/T3 connections can be made freely.
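Regarding item 2 above: where the same small set of values is URL-encoded over and over (fixed parameter names or category codes, say), one possible mitigation is to encode each distinct value once and reuse the result. This is a sketch, not a fix for URLEncoder itself: it assumes Java 8 or later (for `ConcurrentHashMap.computeIfAbsent`), so it does not target the pre-1.5 JDKs mentioned above; the class name and sample values are hypothetical, and because the cache is unbounded it should never be fed arbitrary user input.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class EncodedParams {
    // Only suitable for a small, bounded set of repeated values; caching
    // arbitrary user input here would grow without limit.
    private static final ConcurrentMap<String, String> CACHE = new ConcurrentHashMap<>();

    /** Returns the form-encoded value, encoding it only the first time it is seen. */
    static String encode(String value) {
        return CACHE.computeIfAbsent(value, EncodedParams::encodeUncached);
    }

    private static String encodeUncached(String value) {
        try {
            // Explicit charset; the one-argument URLEncoder.encode is deprecated.
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    static int cachedEntries() {
        return CACHE.size();
    }

    public static void main(String[] args) {
        String[] categories = {"Home & Garden", "Home & Garden", "Toys"}; // hypothetical values
        for (String c : categories) {
            System.out.println(c + " -> " + encode(c));
        }
        System.out.println("Distinct values encoded: " + cachedEntries());
    }
}
```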

If you have any queries or need clarification, leave me a comment and I'll try to get back to you.